【Text by Observer Net, Yuan Jiaqi】

DeepSeek's latest release has once again stirred the AI community. On December 1, the Chinese artificial intelligence (AI) startup DeepSeek released two official models: DeepSeek-V3.2 and DeepSeek-V3.2-Speciale.

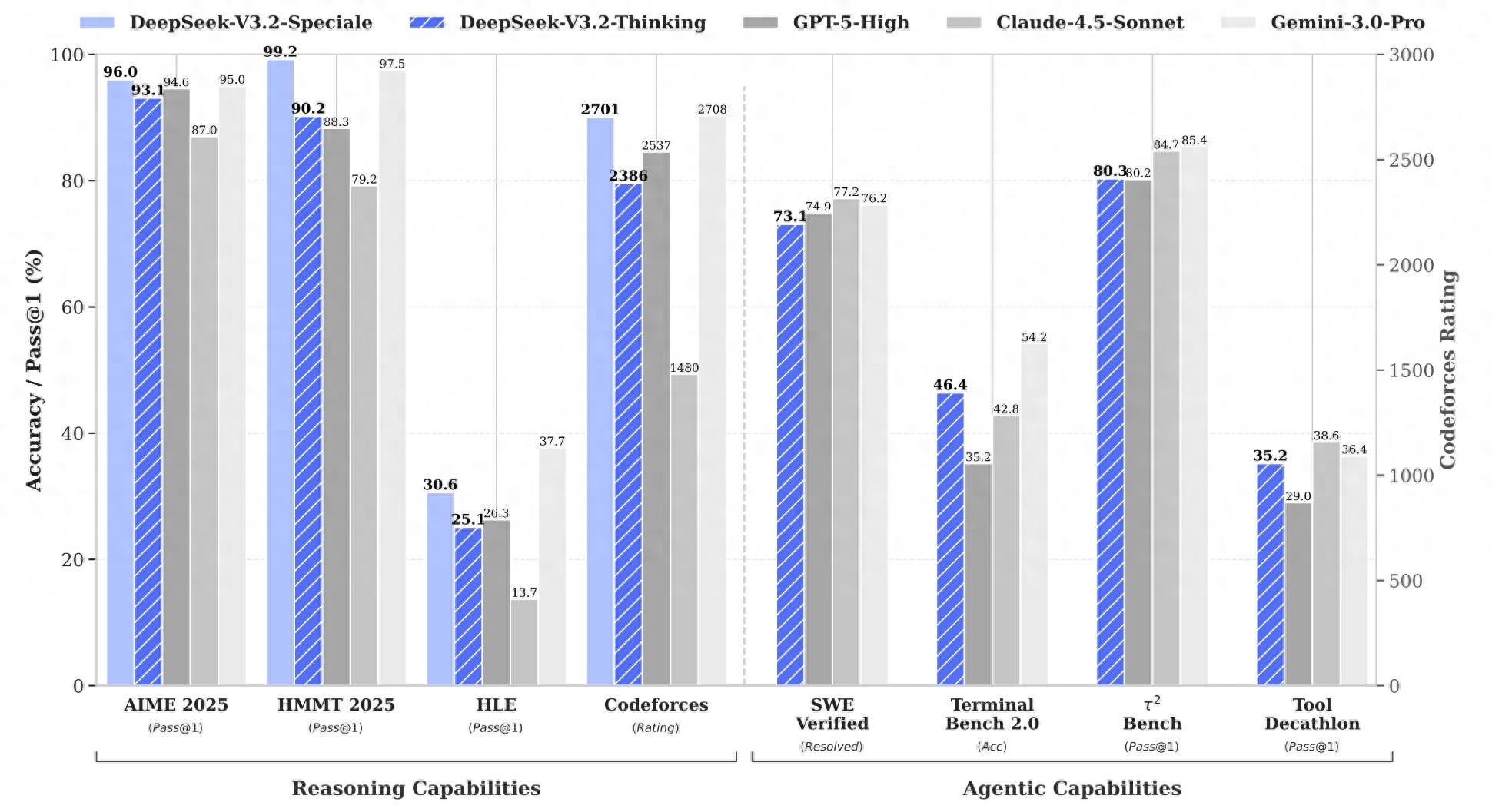

According to DeepSeek, DeepSeek-V3.2 is positioned as "balanced and practical" and matches OpenAI's GPT-5 on mainstream reasoning benchmarks. DeepSeek-V3.2-Speciale, which has significantly enhanced reasoning capabilities, performs comparably on reasoning benchmarks to "Gemini 3.0 Pro," the next-generation AI model Google DeepMind recently launched.

DeepSeek also revealed that V3.2-Speciale achieved gold-medal-level performance in international competitions such as the International Mathematical Olympiad (IMO 2025) and the International Olympiad in Informatics (IOI 2025). This puts it on par with the industry giants: until now, only unreleased internal models from OpenAI and Google DeepMind had reached this level.

The South China Morning Post reported on December 2 that this breakthrough from an open-source lab has sparked widespread discussion among AI researchers, especially since the release coincides with the upcoming "Oscars of AI," the 2025 Conference on Neural Information Processing Systems (NeurIPS).

NeurIPS, held annually, is one of the most prestigious academic conferences in machine learning and AI worldwide and is rated a Class A conference by the China Computer Federation (CCF). It ranks 7th in Google Scholar's global ranking of academic journals and conferences. Together with the International Conference on Machine Learning (ICML) and the International Conference on Learning Representations (ICLR), it is regarded as one of the three most selective, highest-level, and most influential conferences in AI, representing the state of the art in machine learning and AI today.

Although the characteristically low-key DeepSeek has not yet said whether it will send representatives, Florian Brand, who is attending NeurIPS in San Diego, could hardly contain his excitement.

Brand, who specializes in China's open-source AI ecosystem, told the Hong Kong newspaper that if DeepSeek researchers appear at the event, they are likely to draw significant attention.

He added, "After DeepSeek announced the new model, all the related discussion groups were flooded with messages today."

The Hong Kong newspaper also noted that this year's NeurIPS adopted a dual-venue format for the first time, with events held simultaneously in San Diego, California, and Mexico City. The arrangement stems mainly from the organizers' concern that international researchers might have difficulty obtaining U.S. visas. Many Chinese participants have chosen to attend the Mexico City venue.

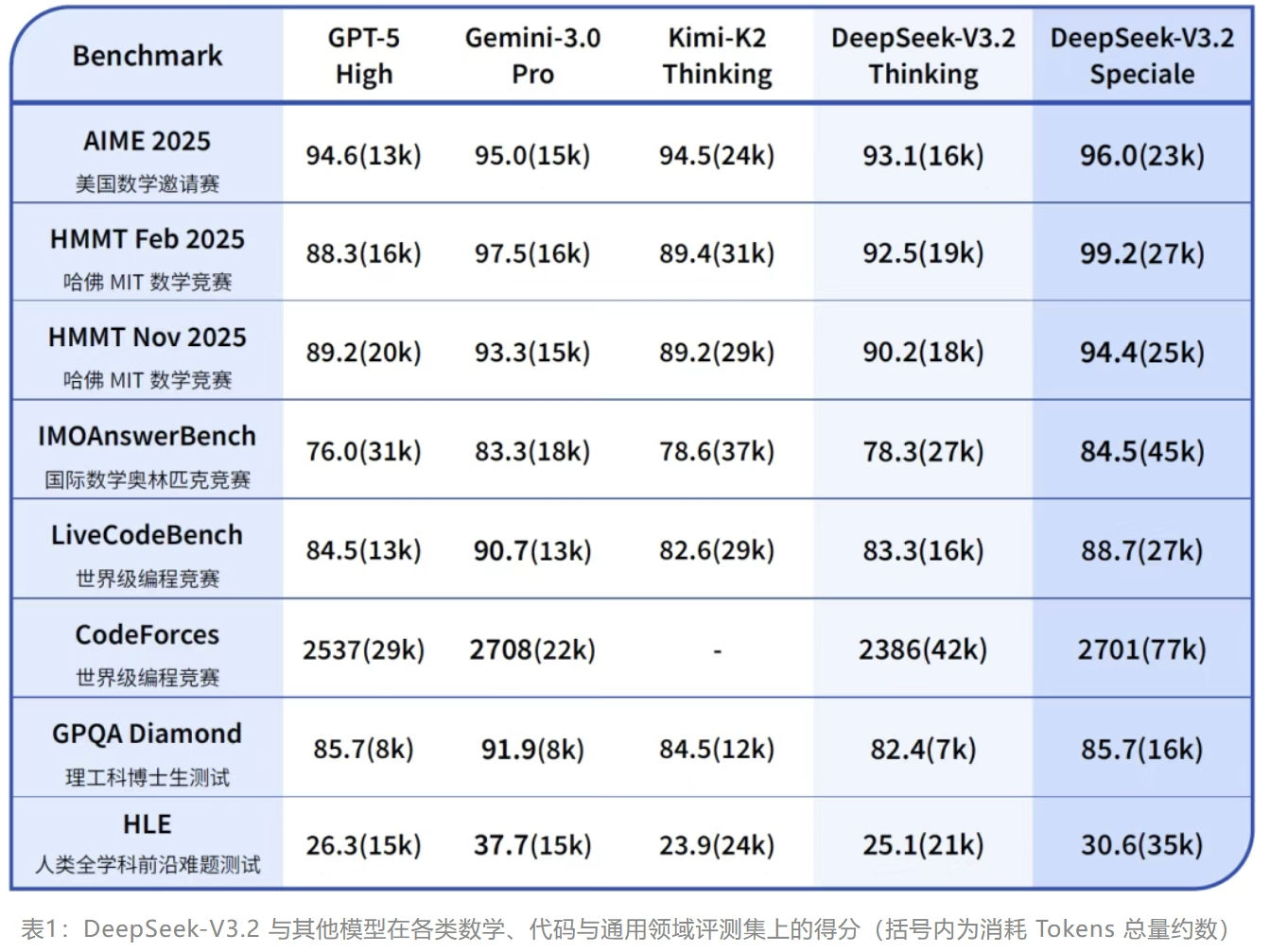

Benchmark results for DeepSeek-V3.2 and comparable models. Screenshot from the DeepSeek technical report

According to DeepSeek, V3.2 is designed to balance reasoning ability against output length, making it suitable for everyday use such as question answering and general agent tasks. On public reasoning benchmarks, V3.2 reached the level of GPT-5, slightly below Gemini-3.0-Pro. Compared with Kimi-K2-Thinking, V3.2 produces significantly shorter outputs, greatly reducing computational cost and user waiting time.

DeepSeek says V3.2 "reached the highest level among open-source models in agent evaluations," significantly narrowing the gap between open-source and closed-source models, even though it was not specially trained on the tools used in those evaluations. It is also DeepSeek's first model to integrate thinking into tool use, supporting tool calls in both thinking and non-thinking modes.

DeepSeek-V3.2-Speciale, built for "extreme reasoning," is a version of V3.2 with enhanced long-form thinking. It incorporates the theorem-proving capabilities of DeepSeek-Math-V2 to probe the limits of what the model can do. The model shows strong instruction following, rigorous mathematical proof, and logical verification abilities, and its performance on mainstream reasoning benchmarks is comparable to Gemini-3.0-Pro.

More notably, V3.2-Speciale achieved gold-medal-level results at the International Mathematical Olympiad (IMO 2025), the China Mathematical Olympiad (CMO 2025), the International Collegiate Programming Contest World Finals (ICPC World Finals 2025), and the International Olympiad in Informatics (IOI 2025). Its ICPC and IOI results would have placed second and tenth, respectively, among human competitors.

DeepSeek's official website, app, and API have now been updated to the official V3.2 release. The Speciale version is available only through a temporary API service for community evaluation and research. The models have been open-sourced.

Screenshot from the DeepSeek technical report

Alongside the two models, DeepSeek released an accompanying technical report. Susan Zhang, a senior research engineer at Google DeepMind, praised it on social media, commending its level of detail and acknowledging the startup's work on post-training stability and agent capabilities.

Bloomberg noted that the result shows that, at least on some core metrics, China's open-source AI systems now rival Silicon Valley's top proprietary models.

U.S. media added that the release also sends an important signal: after launching a groundbreaking model that upended the AI field in January, the influential lab is stepping up its R&D, aiming to make AI computing faster and more efficient and to consolidate its leading position in China's AI sector.

Just last week, DeepSeek released the open-source model DeepSeek-Math-V2, which demonstrated strong theorem-proving capabilities.

Clement Delangue, co-founder and CEO of the open-source AI company Hugging Face, wrote on X: "Imagine you can have the brain of one of the world's best mathematicians for free."

He stressed that users can freely explore, fine-tune, and optimize the Chinese model and run it on their own hardware: "No company or government can take it back. This is the best example of AI and knowledge democratization."

“To my knowledge, no chatbot or API has ever allowed you to access a model at the level of an IMO 2025 gold medalist,” Delangue added.

As the Financial Times previously reported, a study by MIT and Hugging Face found that over the past year, the download share of open-source AI models developed by Chinese teams rose to 17%, overtaking the 15.8% share of models from American teams. This is the first time Chinese teams have surpassed their American counterparts on this measure, giving them a key advantage in the global adoption of AI technology.

Amid the AI boom sweeping the global tech industry, companies such as OpenAI, Google, and Anthropic tend to follow a "closed" strategy, keeping full control of their advanced AI technology and profiting through user subscriptions and enterprise partnerships. Chinese tech companies, by contrast, tend to be more open, releasing a steady stream of open-source models.

Wendy Chang, a senior analyst at the Mercator Institute for China Studies, pointed out, “Compared to the United States, open source is more of a mainstream trend in China. American companies don't want to do this; they rely on high valuations to make money and don't want to disclose their commercial secrets.”

According to the MIT and Hugging Face data, DeepSeek and Alibaba Cloud's Qwen are the most downloaded Chinese open-source models. The Financial Times reported that the DeepSeek-R1 model shocked Silicon Valley: built with low cost and limited computing power, it still performed well enough to rival top American models, sparking debate over whether American AI labs can maintain their competitive edge.

Shayne Longpre, a researcher at MIT, said Chinese tech companies are changing the paradigm for AI model releases: many of them ship new models every week or two, offering a range of versions for users to choose from. American tech companies, by contrast, usually release a new series of models every six months to a year.

Industry insiders told the Financial Times that despite U.S. measures such as chip export controls, China has a deep pool of outstanding talent that has shown extraordinary creativity in developing open-source models.

"While American AI labs bet on breakthroughs in intelligence to reap huge profits, their Chinese open-source competitors focus more on spreading the use of AI," The Economist summarized. "If they succeed, DeepSeek's impact may be just the beginning."

This article is exclusive to Observer Net. Unauthorized reproduction is prohibited.

Original: toutiao.com/article/7579178332865790473/

Disclaimer: This article represents the views of the author.