(By Chen Jishen, Editor: Zhang Guangkai)

On October 20, DeepSeek once again open-sourced a new model.

In GitHub (

https://github.com/deepseek-ai/DeepSeek-OCR), its latest model is named DeepSeek-OCR, which is an OCR (Optical Character Recognition) model. The parameter count of this model is 3B.

This project was completed by three researchers from DeepSeek: Haoran Wei, Yaofeng Sun, and Yukun Li. One of the first authors, Haoran Wei, previously worked at StepZen, and led the development of the GOT-OCR2.0 system aimed at achieving "Second-generation OCR" (arXiv:2409.01704). This project has already received over 7800 stars on GitHub. Therefore, it is natural that he leads DeepSeek's OCR project as well.

DeepSeek states that the DeepSeek-OCR model efficiently compresses long text contexts through optical two-dimensional mapping (compressing text content into visual pixels).

The model mainly consists of two core components: DeepEncoder and DeepSeek3B-MoE-A570M decoder. Among them, DeepEncoder serves as the core engine, maintaining low activation under high-resolution input and achieving high compression ratio, thus generating an appropriate number of visual tokens.

Experimental data shows that when the number of text tokens is within ten times that of visual tokens (i.e., compression rate

This result demonstrates that the method has considerable potential in research directions such as long context compression and LLM memory forgetting mechanisms.

Additionally, DeepSeek-OCR also shows high practical value. In the OmniDocBench benchmark test, it outperformed GOT-OCR2.0 (256 tokens per page) with only 100 visual tokens; simultaneously, it surpassed MinerU2.0 (more than 6000 tokens per page) with less than 800 visual tokens. In actual production environments, a single A100-40G GPU can generate more than 200,000 pages (200k+) of LLM/VLM training data per day.

DeepSeek's explored method can be summarized as: using the visual modality as an efficient medium for compressing text information.

In short, an image containing document text can represent rich information with far fewer tokens than equivalent text, indicating that optical compression through visual tokens can achieve much higher compression rates.

Based on this insight, DeepSeek reexamines visual language models (VLMs) from the perspective of LLMs. Their research focuses on how visual encoders improve the efficiency of LLMs in processing text information, rather than human-adept basic visual question answering (VQA) tasks. DeepSeek states that the OCR task, as an intermediate modality connecting vision and language, provides an ideal testing platform for this visual-text compression paradigm because it establishes a natural compression-decompression mapping between visual and text representations while providing quantifiable evaluation metrics.

Given this, DeepSeek-OCR was born. It is a VLM designed for efficient visual-text compression.

As shown in the figure, DeepSeek-OCR adopts a unified end-to-end VLM architecture composed of an encoder and a decoder.

DeepSeek-OCR's innovative architecture not only achieves efficient visual-text compression but also demonstrates strong performance potential in practical applications.

The core breakthrough of this model lies in its unique dual-component design: DeepEncoder encoder and MoE decoder.

At the encoder level, DeepSeek creatively combines the local perception capabilities of SAM-base with the global understanding advantages of CLIP-large. Like an experienced ancient book restorer, it can accurately identify details of each character with a "microscope" (window attention) and grasp the layout structure of the entire document with a "wide-angle lens" (global attention). Particularly worth noting is its innovative 16-times down-sampling mechanism - which is equivalent to compressing a 300-page book into 20 pages, yet retaining 97% of the key information.

The MoE decoder uses a mixture of experts mechanism like a professional translation team: when facing different languages and formats of documents, the system automatically activates the most skilled 6 "experts" to work together. This dynamic resource allocation allows a 3B parameter large model to have a computational cost of only 570M parameters during actual operation, achieving a processing efficiency of 200,000 pages per day on an A100 graphics card - equivalent to the workload of 100 professional data entry clerks.

In practical tests, DeepSeek-OCR demonstrated astonishing adaptability:

For simple PPT documents, only 64 visual tokens are needed to accurately restore the content, with recognition speed comparable to human scanning;

When processing complex academic papers, 400 tokens can fully retain mathematical formulas, chemical equations, and other professional symbols;

In multi-language mixed document tests, the model successfully identified special scripts such as Arabic and Sinhala;

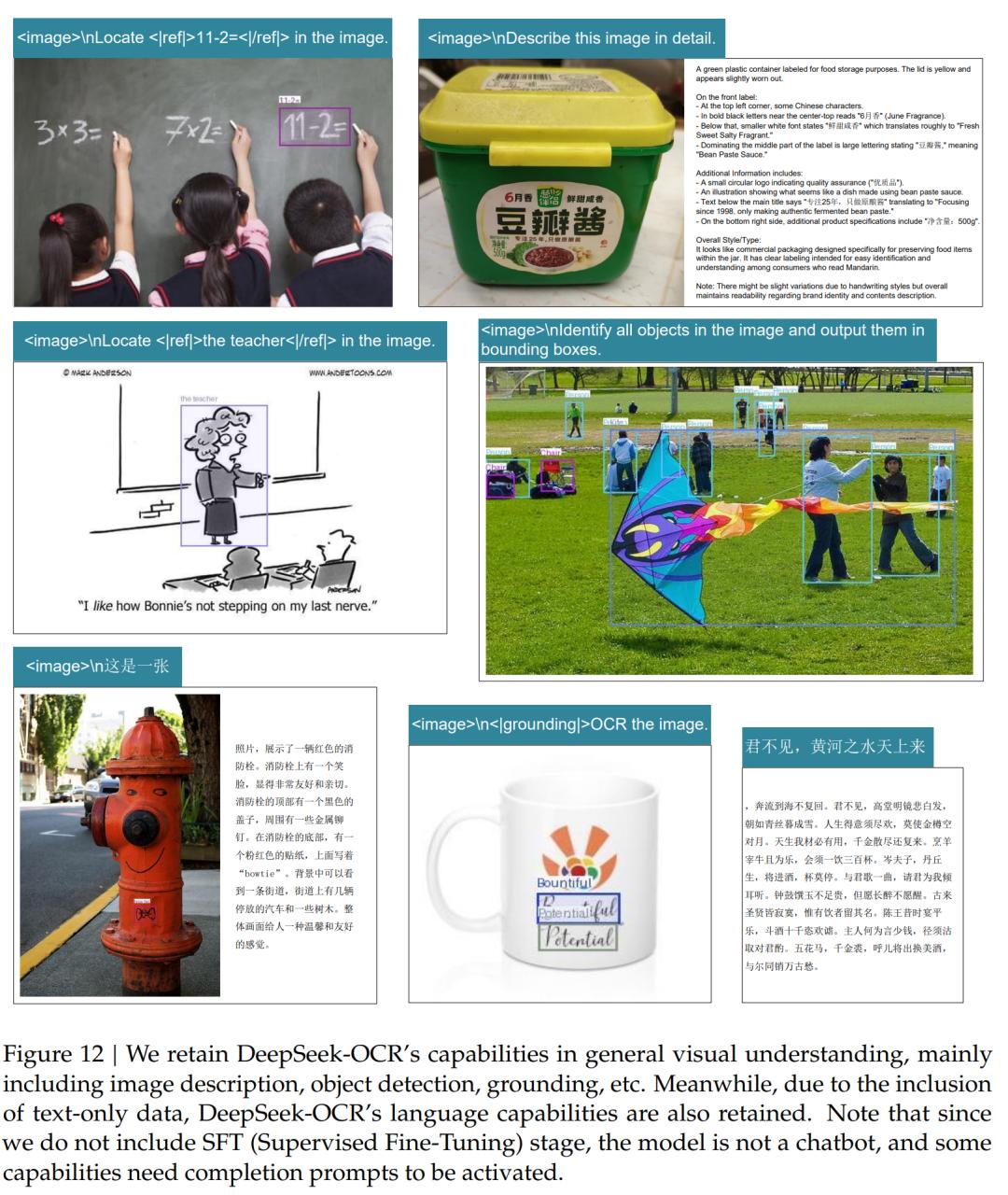

Additionally, DeepSeek-OCR also possesses a certain degree of general image understanding ability.

This also means that DeepSeek-OCR has broad application potential. In the financial field, it can instantly convert thick reports into structured data; in the medical industry, it can quickly digitize historical medical records; for publishing institutions, the efficiency of digitizing ancient texts will increase by dozens of times. More notably, the model's "visual memory" characteristic provides a new approach to overcoming the limitations of large language models' context length.

Original article: https://www.toutiao.com/article/7563253102342865442/

Statement: The article represents the views of the author. Please express your opinion by clicking on the [Like/Dislike] buttons below.