Source of this article: Times Weekly, Author: Zhu Chengcheng

China's domestic AI chip industry has officially put another card on the table.

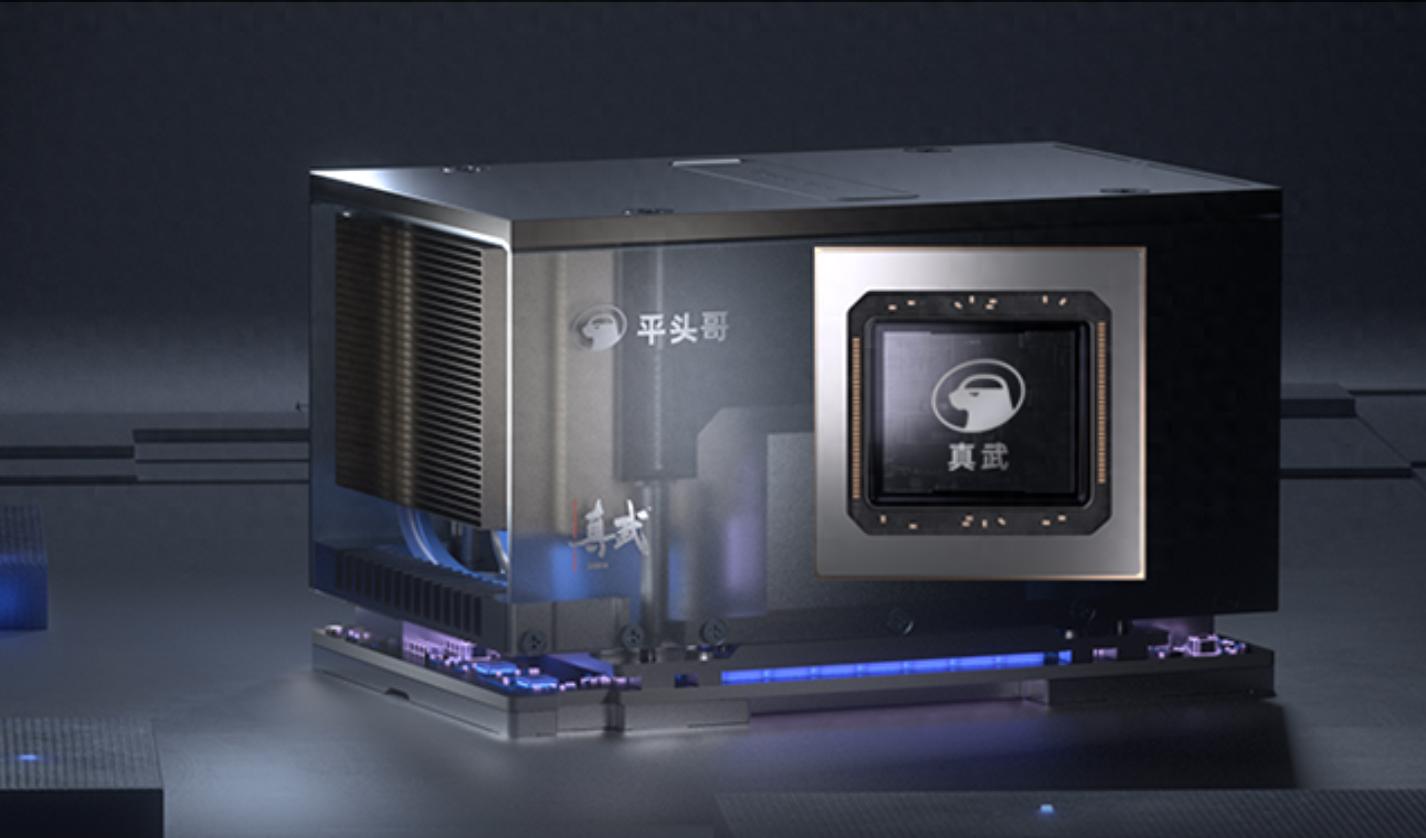

On the morning of January 29, T-Head Semiconductor, a subsidiary of Alibaba (09988.HK; BABA.NYSE), quietly unveiled a high-end AI chip called the "Zhenwu 810E" on its official website.

There was no launch event, but the release quickly drew attention across the industry. In September last year, in a report on the construction of China Unicom's Sanjiangyuan Green Power Intelligent Computing Center, CCTV News showed a table comparing the key parameters of "domestic cards and NV (NVIDIA) cards." It listed Alibaba T-Head's PPU, Huawei's Ascend 910B, and Bitmain's 104P side by side with NVIDIA's A800 and H20.

At the time, T-Head's chip had no public name and was referred to in industry discussions simply as the "PPU." It now has an official identity: the Zhenwu 810E.

Image source: T-Head's official website

According to T-Head's official website, the Zhenwu 810E uses an in-house parallel computing architecture and inter-chip interconnect technology, paired with a fully in-house software stack, so that both its hardware and software are developed entirely in-house. Its 96GB of HBM2e memory and 700GB/s interconnect bandwidth are broadly consistent with the parameters shown earlier in the CCTV footage.

Notably, the chip's release may complete the picture of Alibaba's AI strategy: Tongyi Lab is responsible for large-model R&D, Alibaba Cloud provides cloud services, and T-Head focuses on AI chips. Together they form what is dubbed the "Tongyunge" system, a name combining Tongyi, Aliyun (Alibaba Cloud), and Pingtouge (T-Head's Chinese name).

One model, two strategies

Few companies worldwide have built closed-loop capabilities across all three pillars: large models, cloud computing, and in-house AI chips. Google and Alibaba are the most prominent examples. From chips at the bottom layer, through cloud platforms, up to large models at the top, every layer can be co-developed and evolve within a single system.

This full-stack, in-house approach sidesteps the uncertainty of external supply and ensures continuity in model training, inference, and large-scale deployment. It also allows chip architecture, system software, and model design to be co-optimized more deeply, rather than stopping at mere compatibility.

"Alibaba and Google both develop the full 'chips, cloud, models' stack in-house and co-optimize it to improve computing power, while also offering AI cloud services to external customers," Ding Shaotang, Chief Analyst of the GKURC Industry Economic Research Institute, told Times Weekly. The difference, he said, is that T-Head's portfolio spans multiple chip types and Qwen follows an open-source route focused on domestic scenarios, whereas Google's TPU is specialized for AI acceleration and Gemini is closed-source, backed by a mature global footprint.

Differentiation at the model layer is accelerating. On January 26, Tongyi Lab released its flagship reasoning model, Qwen3-Max-Thinking, which set new global records on several authoritative benchmarks, with performance comparable to GPT-5.2 and Gemini 3 Pro.

An earlier Western Securities research report stated that Alibaba continues to strengthen its open-source ecosystem, coding, reasoning, and enterprise usability through its model lineup built on Qwen3-Max and Qwen3-Next, which feature hybrid attention and efficient sparse architectures. Google, meanwhile, has achieved top-tier performance with Gemini 3 in reasoning, multimodality, agentic tool use, multilingual tasks, and long context, giving it a current-stage advantage in closed-source models.

In terms of technical approach, Google relies mainly on its in-house TPUs on the chip side and has built a mature ASIC system for both training and inference. Its latest model, Gemini 3, was trained on Google's own TPU clusters.

Alibaba's approach is more diverse and flexible. The Western Securities report pointed out that Alibaba has built a "one cloud, multiple chips" system based on "Yitian + Hanguang + the Lingjun platform." For domestic substitution in general-purpose inference, Alibaba's PPU can match the H20 on metrics such as memory capacity and PCIe.

Industry insiders also said that, judging by key parameters, the overall performance of the Zhenwu PPU exceeds that of mainstream domestic GPUs and is on par with the H20.

Wu Zihao, CEO of Ronghe Semiconductor Consulting, further told Times Weekly, "The in-house AI chips of domestic CSPs (cloud service providers), such as Baidu's Kunlun Chip and Alibaba T-Head's PPU, are all in the first tier."

"The full-stack closed-loop model is driving AI chips toward specialized architectures and loosening NVIDIA's monopoly," Ding Shaotang emphasized. He added that this model will also raise ecosystem barriers in AI competition: major players with full-stack capabilities may monopolize the high-end market, shifting global chip competition from standalone hardware to system-level experience.

Products deployed, listing possibly in the works

"Domestic AI adoption is expanding. Whether it's large models deployed by enterprises or generative AI applications for consumers, both are driving demand for computing power at ever greater scale," Cui Nan, Executive Director of Savills Greater China, told Times Weekly. In Cui's view, domestic chipmakers' technical capabilities and ecosystem compatibility have improved significantly over the past two years, and they are now able to support larger-scale applications.

On the product side, according to T-Head's official website, the Zhenwu PPU is designed for core scenarios such as AI training, AI inference, and autonomous driving. For autonomous driving, it supports data generation, model training, and cloud simulation for intelligent-driving workloads. For AI training, it is compatible with the mainstream AI ecosystem and adapts to mainstream models, frameworks, operators, and operating systems. For AI inference, it supports mainstream inference engines and ships with T-Head's in-house inference framework and operator library, which, combined with its large memory capacity, provide targeted optimization for large-model inference.

The Zhenwu PPU has reportedly been deployed in multiple 10,000-card clusters on Alibaba Cloud, serving more than 400 customers including State Grid, the Chinese Academy of Sciences, XPeng Motors, and Sina Weibo.

As product deployment accelerates, capital-market moves are starting to follow. Media recently reported that Alibaba Group plans to restructure its chip design unit, T-Head Semiconductor, into an independent entity partly owned by employees, and is considering an initial public offering (IPO) on that basis.

A Morgan Stanley research report puts T-Head's potential valuation at $25 billion to $62 billion, roughly 6% to 14% of Alibaba's current market value. The report stressed that this is a preliminary estimate based on the enterprise value-to-revenue (EV/Revenue) multiples of peers such as Cambricon and Kunlun Chip, under aggressive assumptions about 2026 revenue, and that the actual value will depend heavily on future business scale, competitiveness, and the final transaction structure.

Earlier, Kunlun Chip, the AI chip company affiliated with Baidu (09888.HK), had already begun its own listing process. On January 2, Baidu announced in Hong Kong that Kunlun Chip had confidentially submitted a listing application to the Hong Kong Stock Exchange on January 1, 2026, seeking a listing on the exchange's Main Board.

In Cui Nan's view, large models are gradually becoming infrastructure for industrial intelligence. Not only are internet companies increasing their investment, but traditional industries such as finance, energy, manufacturing, and healthcare are also actively exploring AI applications. This means long-term demand for inference and training compute will remain high. "Over the next few years, the domestic AI computing chip market will not only grow rapidly in scale; more importantly, AI computing chips will become one of the most strategically significant links in the entire AI industry chain."

Original: toutiao.com/article/7600687948161499654/

Disclaimer: This article represents the author's own views.