Alibaba's Tongyi team made a strong showing with four releases in a row, sweeping the GitHub open-source rankings.

This week, from July 22 to 25, Alibaba released, one after another, the non-thinking version of Qwen3-235B, the Qwen3-Coder programming model, the Qwen3-235B-A22B-Thinking-2507 reasoning model, and the WebSailor AI Agent framework. The four products topped the open-source rankings in their respective fields: base models, programming models, reasoning models, and intelligent agents.

The authoritative benchmarking organization Artificial Analysis commented bluntly:

Tongyi Qianwen 3 (Qwen3) is the most intelligent non-thinking base model in the world.

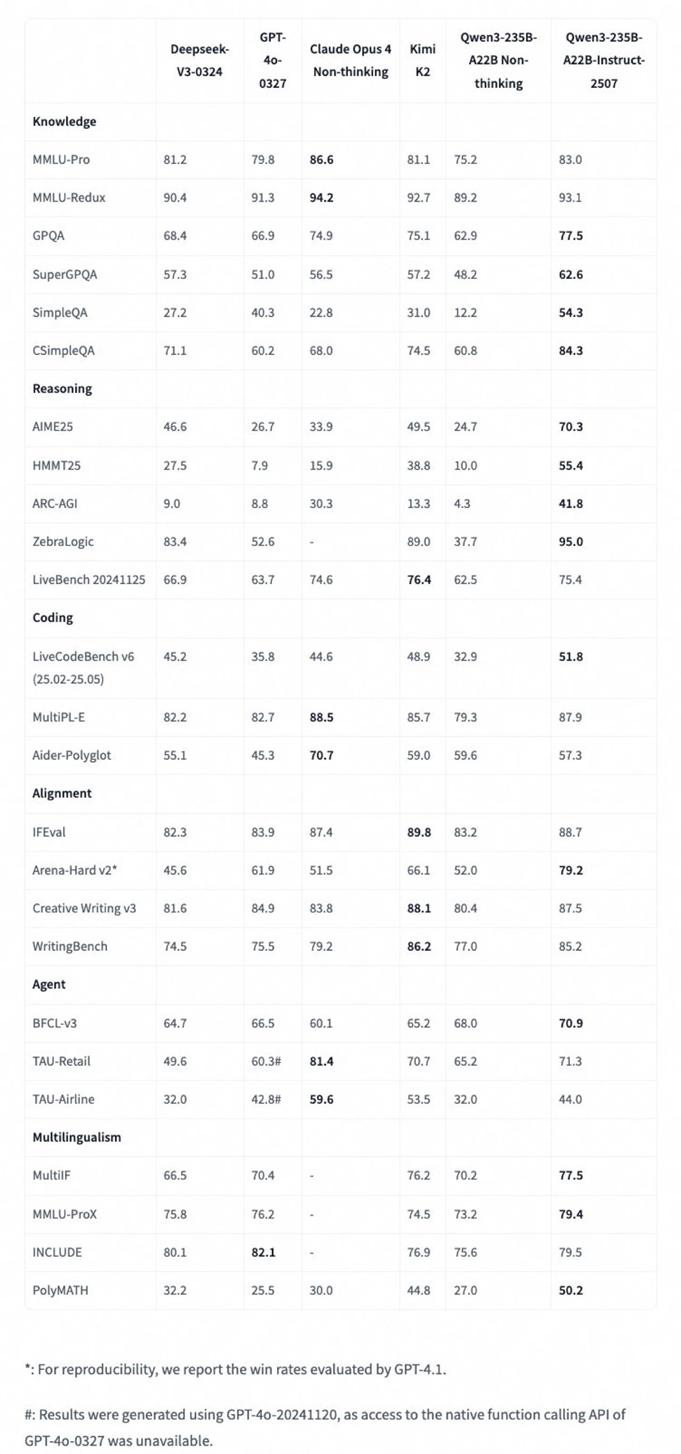

Non-Thinking Models Can Excel Too

According to HardAI, on Tuesday morning Alibaba's Tongyi Qianwen team released its latest non-thinking model, Qwen3-235B-A22B-Instruct-2507-FP8.

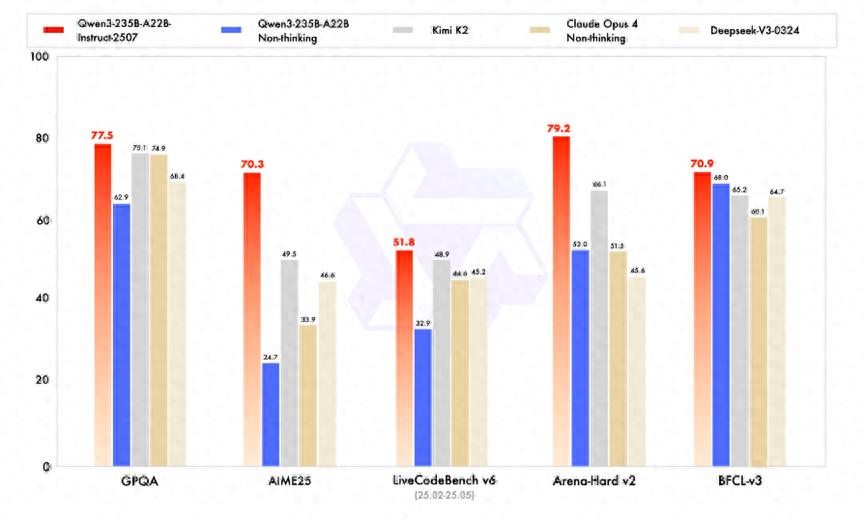

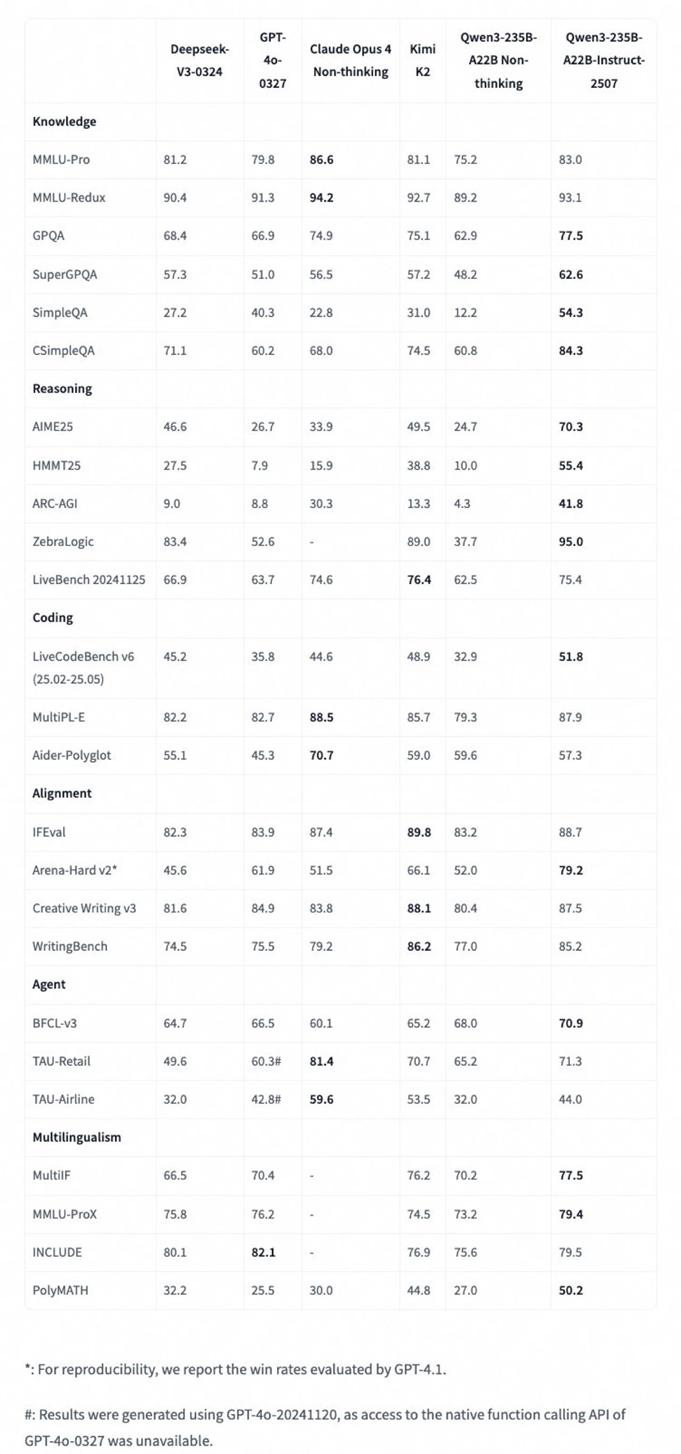

The model performed strongly across several key benchmarks. It outperformed top open-source models such as Kimi-K2 across the board and also led top closed-source models such as Claude-Opus4-Non-thinking.

Notably, this Qwen3 update stands out in agent capabilities, scoring especially well on BFCL (the Berkeley Function Calling Leaderboard, which measures tool-calling and agent ability). This suggests the model has reached a new level in understanding complex instructions, planning autonomously, and calling tools to complete tasks. Agent capability is expected to become a core competitive advantage for future AI applications.

Programming Model Causes a Stir in the Community

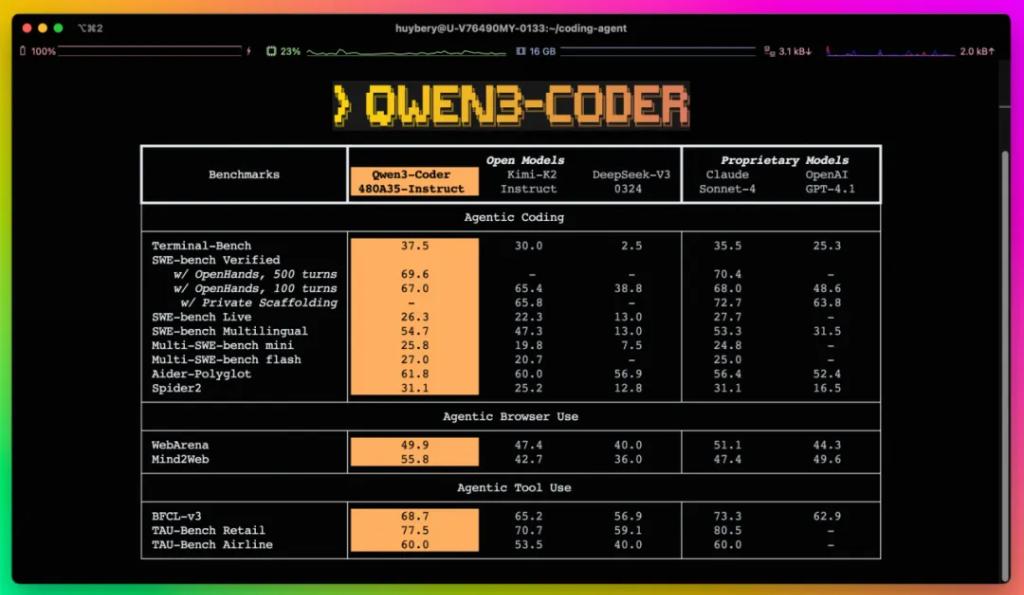

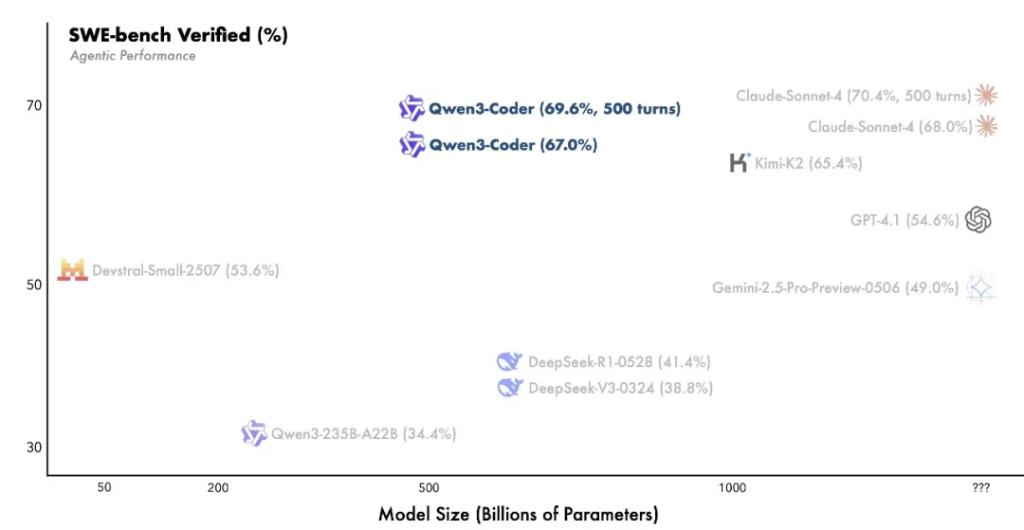

Qwen3-Coder, released on July 23, caused a sensation in the global developer community.

As Wall Street Observer previously reported, this MoE-based programming model has 480B total parameters and 35B activated parameters, and it natively supports a 256K-token context window that can be extended to 1M tokens.

On SWE-bench Verified, the benchmark developers follow most closely, Qwen3-Coder achieved the best result among open-source models.

The model was trained on 7.5 trillion tokens, 70% of them code. It then went through long-horizon reinforcement learning and large-scale hands-on training across 20,000 virtual environments, which gives it strong performance on real-world, multi-turn interactive tasks.

Alongside the model, Alibaba released Qwen Code, a companion command-line tool that gives developers a complete programming workflow.

Qwen3-Coder has drawn praise from technology leaders. Aravind Srinivas, CEO of Perplexity, lauded its strength:

The results are amazing. Open source is winning.

Jack Dorsey, co-founder of Twitter, stressed that Qwen3-Coder works very well with Goose, the AI agent framework developed by Block:

Goose + Qwen3-Coder = wow

AI Agent Framework Challenges Closed-Source Monopoly

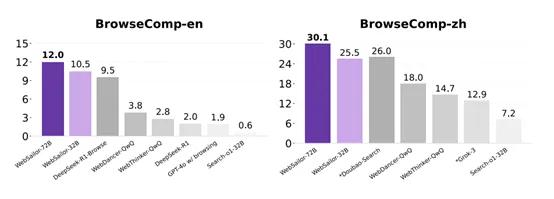

WebSailor, the AI agent framework open-sourced by Alibaba's Tongyi Lab during the same period, takes direct aim at OpenAI's Deep Research.

On the BrowseComp-en and BrowseComp-zh benchmarks, the framework significantly outperformed all open-source agents, with results comparable to proprietary closed-source models.

WebSailor is built on two technical components: complex task generation and a reinforcement learning module. By constructing complex knowledge graphs and applying dynamic sampling strategies, the system can efficiently retrieve and reason over massive amounts of information.

Beyond complex tasks, WebSailor also performs well on simple ones. On the SimpleQA benchmark, for example, it outperformed every other model it was compared against.

The project has earned more than 5,000 stars on GitHub and at one point ranked first on GitHub's daily trending list.

These two modules, complex task generation and reinforcement learning, form the core of WebSailor and work together to improve how open-source agents handle complex information-retrieval tasks.

Open-sourcing the framework is significant: it breaks the closed-source monopoly in information retrieval and gives developers worldwide an open-source alternative comparable to Deep Research.

Reasoning Model Tops the Global Open-Source Rankings

Qwen3-235B-A22B-Thinking-2507, released on July 25, was the week's most important release. Its headline benchmark scores:

- AIME25 (mathematics): 92.3

- LiveCodeBench v6 (programming): 74.1

- WritingBench (writing): 88.3

- PolyMATH (multilingual mathematics): 60.1

The detailed leaderboard comparisons are just as impressive: the Qwen3 reasoning model holds its own against the other models compared, all of which, apart from DeepSeek-R1, are top closed-source models.

The model uses an MoE architecture with 235B total parameters, 22B activated parameters, 94 layers, and 128 experts, and it natively supports a context length of 262,144 tokens. It is designed specifically for thinking mode: its default chat template automatically includes the thinking tag, providing strong support for deep reasoning.

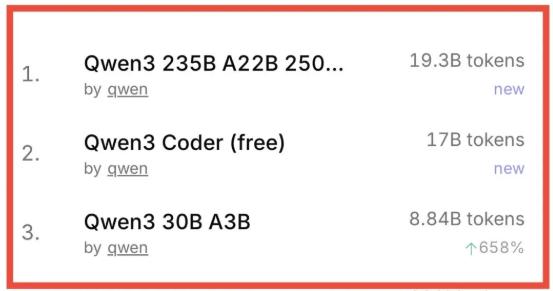

OpenRouter data shows that API usage of Tongyi Qianwen models has surged in recent days to more than 100 billion tokens, placing them among the platform's top three most popular models. The figure directly reflects the market's recognition of Alibaba's open-source models.

Users around the world were stunned by Tongyi's most powerful reasoning model. One put it bluntly:

China's open-source o4-mini.

AI Thinkers also commented:

China just released a monster-level AI model.

This article comes from Wall Street Observer.

Original: https://www.toutiao.com/article/7531240831643107879/

Statement: This article represents the personal views of the author.