【CNMO Technology News】On August 21, DeepSeek officially released its latest large language model, DeepSeek-V3.1, marking another significant advancement in the architecture design and agent capabilities of this series. This update not only optimizes model inference efficiency but also achieves notable breakthroughs in tool calling, multi-task processing, and real-world application scenarios, aiming to provide users with more efficient and reliable AI services.

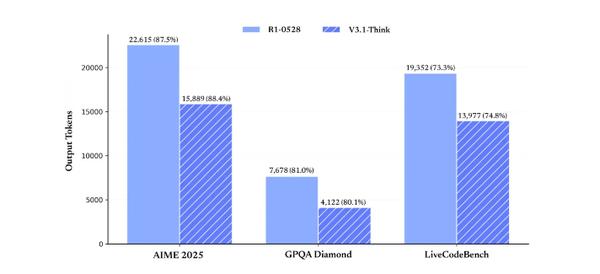

The newly released V3.1 introduces a hybrid inference architecture in which a single model supports both a "thinking mode" and a "non-thinking mode." Users can switch between them with the "Deep Thinking" button in the official app or web interface, trading response speed against reasoning depth. According to the official announcement, V3.1-Think produces 20% to 50% fewer output tokens than the earlier R1-0528 while maintaining comparable performance, which noticeably improves response efficiency. On benchmarks such as AIME 2025 and GPQA, V3.1-Think performs on par with or slightly better than R1-0528 while consuming fewer resources.

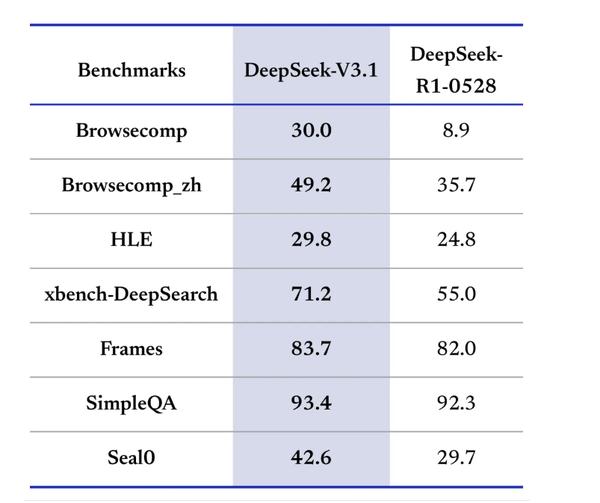

In terms of agent capabilities, post-training optimization gives V3.1 stronger tool calling, particularly in programming, search, and complex task execution. It completes SWE code-repair tasks and Terminal-Bench terminal tasks more efficiently than previous versions. Search-agent evaluations show that V3.1 excels at multi-step reasoning and cross-disciplinary problem-solving, significantly outperforming R1-0528. The API has also been updated and now offers two interfaces: deepseek-chat (non-thinking mode) and deepseek-reasoner (thinking mode). Both have a context length extended to 128K and support strict-mode function calling, which ensures that outputs conform to a predefined schema.
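As a rough illustration of how the two interfaces and strict-mode function calling might fit together, the sketch below builds a request body in Python without sending it. Only the model names `deepseek-chat` and `deepseek-reasoner` come from the announcement. The OpenAI-style payload layout, the `strict` flag name, and the `get_weather` tool are assumptions made for illustration, so check the official API documentation before relying on any of them.

```python
import json

# Model names are taken from the article; the mapping itself is illustrative.
MODEL_BY_MODE = {False: "deepseek-chat", True: "deepseek-reasoner"}


def build_request(prompt: str, thinking: bool = False) -> dict:
    """Build a chat request body in an OpenAI-style layout (assumed, not confirmed)."""
    weather_tool = {  # hypothetical tool, used only as an example
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "strict": True,  # assumed flag name for strict-mode function calling
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
                "additionalProperties": False,
            },
        },
    }
    return {
        "model": MODEL_BY_MODE[thinking],
        "messages": [{"role": "user", "content": prompt}],
        "tools": [weather_tool],
    }


if __name__ == "__main__":
    # Print the body that would be sent for a thinking-mode request.
    print(json.dumps(build_request("Weather in Hangzhou?", thinking=True), indent=2))
```

The point of a strict schema is that the arguments the model returns for `get_weather` should contain exactly the declared fields, so the caller can parse them without defensive checks.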

To improve compatibility, the API now supports the Anthropic API format, making it easier to integrate with the Claude ecosystem. On the model side, V3.1 Base is now open-sourced on Hugging Face and ModelScope. It was further trained on an additional 840B tokens, uses FP8 precision, and ships with an updated tokenizer and chat template. DeepSeek reminds developers to be aware of the differences between versions.

Additionally, starting September 6, DeepSeek will adjust its API pricing and discontinue the night-time discount. Details are available in the platform's announcement.

Original article: https://www.toutiao.com/article/7540928684249596470/
