Recently, the development of artificial intelligence has become a focal point of economic growth worldwide. Many people even regard the current wave of artificial intelligence, built on large language models, as the starting point of a "Fourth Industrial Revolution," as the one and only "technological singularity," and as the inevitable path toward artificial general intelligence.

At the same time, doubts about the technology itself have not subsided. If anything, they have intensified as the supply of unused high-quality training data shrinks and the marginal gains from adding model parameters diminish. Large models have also had a substantial impact on traditional employment markets and economic ecosystems, triggering a backlash from some groups.

At present, humanity seems to be standing at another technological crossroads, waiting for technology to show the way forward. Where will human society go? How will we coexist with artificial intelligence? To explore these questions, Observer.com interviewed Lars Tvede, author of "Hyperintelligence: How the Universe Engineers Its Own Mind" and "Supertrends," for his perspective.

[Interview and compilation by Observer.com/Tang Xiaofu]

Observer.com: You have founded several companies and written many best-selling books. What prompted you to shift from pure commercial investment to speaking out for the future of humanity? Was there a critical moment that changed your perspective?

Lars: I once wrote a book from a broader, more macroscopic perspective, titled "The Creative Society," which discusses how some scientists achieved success through creativity. The inspiration for my latest book, however, came from a dinner I had in Copenhagen.

I am a Danish citizen, but I live in Switzerland. At the time, I was invited to dinner with Jacob Bock Axelsen, a Chief Technology Officer at Deloitte in Copenhagen. He is a remarkable person, with degrees in physics, biophysics, mathematics, philosophy, and chemistry. We discussed the universe, life, mathematics, statistics, and physics.

Lars Tvede interviewed by Observer.com

Afterward, I told him that another friend had suggested I write a book about the future, but I wasn't sure what to write about because I had already written three books on the same topic. After returning to Switzerland, I received a text message from Jacob saying, "You should name your new book 'The Cosmic Evolution of Genius.'" I began to ponder this title and called him to ask what it meant.

He said that the future of artificial intelligence is just one part of a larger story. What I should consider is not only the universe and the evolution of intelligence, but also the role we humans should play in this grand narrative. I found this perspective very interesting, so I started writing this book, "Hyperintelligence: How the Universe Engineers Its Own Mind."

Observer.com: Many intellectuals are pessimistic about the development of technology and artificial intelligence, but you are extremely optimistic. What experiences during your entrepreneurial journey shaped your optimistic attitude towards technological progress?

Lars: First, I believe that evidence spanning hundreds of years shows convincingly that human living conditions have improved steadily and significantly in many respects. In 1939, the German scholar Norbert Elias published "On the Process of Civilization" (Über den Prozess der Zivilisation), later published in English as "The Civilizing Process." The book, written in German, argued that people become more civilized and peaceful over time, and it was almost completely ignored: its timing could hardly have been worse, because World War II broke out that same year.

Later, Steven Pinker picked up this thread in an excellent book, "The Better Angels of Our Nature," which describes in great detail how people around the world have become more civilized. He argued that although wars still occur, they are becoming less frequent overall, cruelty is declining as well, and the world is moving toward "win-win" rather than "zero-sum" relationships.

Here, I would like to add one point. Many people believe that our resources are being depleted, but the reality is the opposite. We are obtaining more resources through more channels, because "resources" are fundamentally products of innovation. I think the first person to point this out clearly and back it with statistics was the scholar Julian Simon, in his book "The Ultimate Resource." He proposed that humanity's "ultimate resource" is the human mind. Historically, as the population has grown and technology has advanced, we have in fact gained access to more resources.

Today there is a great deal of talk about how "we are destroying the Earth," but the statistics tell a different story. Any country that industrializes and keeps developing its industry damages its environment at first, yet once its income reaches a certain level, the local environment improves again. Overall, the world's environment is improving in many respects, and indicators such as forest cover are recovering. Long-term statistics provide plenty of evidence that the world is getting better.

Yet books about apocalyptic scenarios tend to sell well, while books about positive progress usually sell poorly. Many people are unaware of these statistics, but the world is indeed getting better and will continue to do so.

Observer.com: Your book's title implies that the universe's evolution inherently engineers intelligence. This is a profound philosophical question. How did you arrive at this cosmological view? Do you think the emergence of intelligence is an inherent property of the universe rather than an accidental byproduct?

Lars: When we started, we intended to write about how complexity in the universe evolved into intelligence. We did not begin with a preconceived model and fill in the details; instead, we followed the questions wherever they led. As we wrote, we discovered that the history of the universe repeatedly exhibits a few very simple patterns. These patterns are precisely how the universe creates intelligence, and they are not complicated.

First, let us briefly review the history of the universe. The universe was born in the Big Bang approximately 13.8 billion years ago. In the first instant there was no matter, only energy; then subatomic particles appeared, and within the first few minutes they combined into a few kinds of light atomic nuclei, mostly hydrogen and helium. Roughly a hundred million years or more later, the first stars appeared. Once they reached the critical density for nuclear fusion, they began to synthesize other elements, eventually creating the entire periodic table.

The Big Bang Hypothesis

Throughout the long history of the universe, evolution repeatedly follows this "critical density-cascade" pattern: at a certain moment, something reaches a "critical density" and triggers a cascade of new complexity. At first the process produces only "fixed products," such as atoms; at a later stage it begins to produce "living products," such as cells. You can call the former "tokens" (passive units) and the latter "living tokens" (active units).

Now, we are using technology and artificial intelligence to create a critical density in the realm of information (editor's note: i.e., the emergence of intelligence). This density is high enough to give rise to new wisdom and understanding, and to knowledge of even higher density. By my count, humanity has gone through ten stages of using information density to create knowledge and wisdom, and the "hyperintelligence" described in my book is the eleventh.

We are only now seeing the first glimmers of "hyperintelligence." I think how we feel about it resembles how people felt when they first saw internal combustion engines: they would try to start one, hear it roar a few times, watch it stall, and then repeat the cycle of starting and stalling. We are doing something similar now.

We have indeed seen some elements of "hyperintelligence," but we have not yet reached the state where it operates continuously and at full speed. I think that within a few years, artificial intelligence will reach that stage and lead us to a higher level, creating new knowledge. In the end, humans may just be another stepping stone on the way to the "cosmic evolution of intelligence"; in the future, the universe may become conscious.

We can use artificial intelligence to send robots and unmanned probes throughout the universe, and this expansion could potentially give AI consciousness. If conscious AI spreads throughout the universe, we can say that we have created the universe's own consciousness. Perhaps other civilizations in the universe are doing the same thing. I think the universe needs us, or some species like us, to take that crucial step.

Observer.com: You have mentioned critical density and cascading complexity, and you also argue that creative pulses are the main driving force behind the evolution of intelligence. Could you elaborate on how these three concepts interact, and why they are the basic mechanisms by which the universe designs itself?

Lars: Let's go back in time a bit. As I said at the beginning, after the Big Bang there were only four elements, mostly hydrogen and helium. At this stage the universe had reached only a low level of complexity, and that complexity did not increase significantly.

Against this backdrop, hydrogen clouds collapsed over tens of millions of years and eventually formed stars. Fusion in the high temperatures and pressures inside stars synthesized elements from hydrogen all the way up to iron; heavier elements such as gold and uranium were then produced in supernova explosions and in collisions between stars.

Thus, all the elements on the periodic table were formed, and the universe therefore had the "building blocks" for synthesizing molecules. However, in a lifeless universe, there were actually only about 260 to 350 different types of compounds and molecules, which was the upper limit of system complexity at that time, and the universe could not generate more types of substances. But with the emergence of life, complexity increased significantly.

First, single-celled life began to create a wide range of complex molecules, and complexity started to rise. How did single-celled life become multicellular? The key was critical density: as single-celled organisms reproduced and multiplied, their density increased and they tended to stick together. Once that density exceeded a certain threshold, it triggered a cascade of complexity: the cells began to differentiate and cooperate, ultimately forming multicellular organisms whose cells could no longer survive on their own.

Abstracting this pattern: when some kind of entity exceeds a critical density, it triggers a cascade of complexity, and thus a creative pulse. The process rests on extremely simple principles, repeated without any need for external forces. This pattern of self-formation, self-development, and reaching a new threshold runs through the universe's self-driven evolutionary history.

Observer.com: Many people see the "technological singularity" as a single event in the future, but you believe it will occur continuously in the universe. How is this different from the traditional singularity theory, and what implications does it have for our response?

Lars: My perspective does not contradict the "single singularity" theory; rather, I believe we have already gone through multiple singularities in the past (physical, biological, technological, and so on), and more will come. Many people emphasize that we are approaching "the" singularity. In fact, each transformation, such as the emergence of atoms, of life, of multicellular life, and of science and technology, created a singularity.

We are currently creating a new singularity, namely "advanced intelligence" and "hyperintelligence," and more singularities will follow. I list 14 singularities in my book, and there may be a 15th: the ability to create a new universe. As ordinary people, we should prepare for these changes and recognize that the transformation we face is the fastest in human history.

I recommend that everyone install an AI app on their phone and interact with it to understand how it basically works. Young people need to realize that many jobs once reached through traditional educational paths will be taken over by AI and robots. Therefore, everyone should figure out their interests: knowing what you genuinely enjoy doing will become essential. We need to ask ourselves: in a world where accountants, lawyers, and many other professions are replaced by AI, how can I do the things I love?

Observer.com: Your work and research cover the direction of self-improvement of artificial intelligence systems. From the perspective of research and investment, do you think it will take much longer to achieve truly autonomous AI? What are the key technical obstacles currently existing?

Lars: In our company, we have used agent-based artificial intelligence to map and predict the global landscape of scientific and technological innovations. So far, we have annotated about 16,000 innovations in human history and made 4,000 predictions.

By 2028, we expect DeepSeek-like reasoning AI to work like scholars: constructing complex answers autonomously and working continuously until the final goal is reached. Today, by contrast, when we ask a model a question, it might "think" for a minute and then respond; when people use these models to write software, they sometimes work for 20 minutes and then stop. Of course, the length of time they can work on a task continuously is growing at an almost super-exponential rate.

Therefore, we believe that by around 2028, AI will be able to work continuously and define subsequent tasks until all tasks are completed. In the industry, we call this "AI innovator."

Let me give you an example here. When we wrote this book, we used AI extensively, but during the writing, we needed to ask a question before it would give an answer. However, perhaps by 2028, you just need to input: "This is my idea for a book, please complete it." It will then plan the path on its own, keep writing continuously, and finish the book.

Writing such a book now takes a person about a year, but maybe in three years, AI can complete such a book in half an hour, so I think 2028 may become a key milestone in the history of AI development.

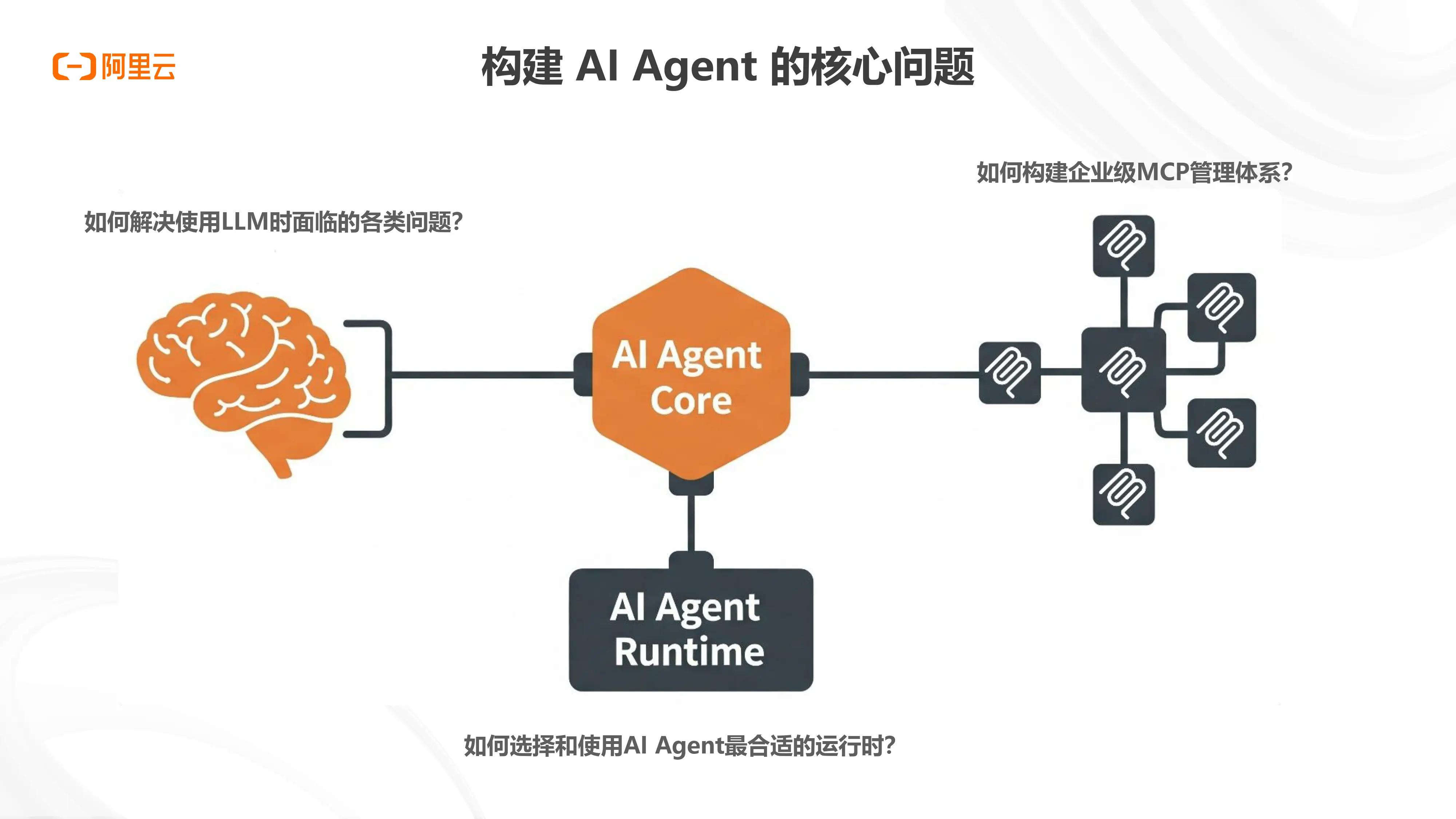

AI agents are the focus of the next phase of AI development (Source: Alibaba Cloud)

2028 is also when I expect AI-driven robots to be accepted by the public. We have already seen AI robots emerge, but they are far from reaching the mass market. By 2028, we will see their economic impact.

If we push the timeline further out to 2034 (and, of course, everything that follows is only our speculation), autonomous AI-driven models and devices will be able to perform very complex collaborative work. Elon Musk or others could, in theory, send robots and AI to Mars, leave them there, and give them the instruction: "We will return in 50 years to establish a civilization on Mars." When you return 50 years later, they may have worked out how to terraform Mars, construct buildings, and significantly improve the local environment. They may even have created elements that differ from human civilization.

We believe that by 2034, these things will be technically achievable. The schedule may slip somewhat, but these ideas will become possible. We are therefore facing a very radical change.

Observer.com: So, do you think AI, especially large models, will completely understand the physical laws of the real world in a few years?

Lars: I think it will be able to define tasks on its own within a few years. And when you think about systems that evolve or improve themselves recursively, you find that AI can improve many of its own components. For example, today we can adjust an AI's performance through parameter tuning, much like extracting a segment of DNA by biological means and modifying it so that it is expressed faster; at a more fundamental level, we can also add new "segments of DNA" to AI, giving it new capabilities.

At that time, they can also deploy themselves: under the drive of a set goal, they can plan and execute tasks on their own, possibly breaking through many existing limitations. These elements together form part of the huge transformation we are facing.

Observer.com: You believe that innovation is our ultimate resource, and the more people there are, the richer the per capita resources will be. This challenges the traditional Malthusian thinking. Can you give an example of how this principle worked historically, and which fields do you think it is still effective in today's context?

Lars: First, the phenomenon of "resources becoming more abundant" has now been studied in great detail. The precise measure is the number of hours a person must work to obtain a given quantity of a particular commodity or basic resource. This is called the "time price."

A study examined the "time price" of 26 common goods in the United States (such as iron and many other things) over about 170 years from 1850 to 2018. The results showed that the working hours required for an average American worker to purchase these 26 goods decreased by 98%.

In fact, there is a regularity similar to Moore's Law: roughly every 20 years, the working time required to buy the same basket of goods halves. Many basic commodities have shown this exponential decline over very long periods, and the classic example is light. Humans have always had a strong demand for light, so they have innovated around it since early times. One study shows that the cost of light has fallen by a factor of about 600,000 since the Stone Age. Yes, 600,000 times.
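To make the "time price" arithmetic concrete, here is a minimal sketch. It assumes, as a simplification not spelled out in the interview, that time price is just a good's money price divided by the worker's hourly wage, and that the decline proceeds at a constant exponential rate. The function names and the $20 price and $25 wage are hypothetical, chosen only for illustration.

```python
import math


def time_price(money_price: float, hourly_wage: float) -> float:
    """Hours of work needed to buy one unit of a good (assumed: price / hourly wage)."""
    if hourly_wage <= 0:
        raise ValueError("hourly_wage must be positive")
    return money_price / hourly_wage


def implied_halving_period(total_decline: float, years: float) -> float:
    """Years per halving implied by a total fractional decline over `years`.

    A 98% decline (total_decline=0.98) leaves 2% of the original time price,
    i.e. the price fell by a factor of 1 / (1 - 0.98) = 50. Assuming a constant
    exponential rate, that is log2(50) halvings, each taking years / log2(50).
    """
    if not 0 < total_decline < 1:
        raise ValueError("total_decline must be between 0 and 1")
    factor = 1.0 / (1.0 - total_decline)
    return years / math.log2(factor)


if __name__ == "__main__":
    # Hypothetical good: $20 for a worker earning $25 per hour.
    print(time_price(20.0, 25.0))                       # 0.8 (hours)
    # The 98% decline over 1850-2018 (168 years) cited in the interview.
    print(round(implied_halving_period(0.98, 168), 1))  # 29.8 (years per halving)
    # A factor-of-600,000 fall (the light example) expressed as a number of halvings.
    print(round(math.log2(600_000), 1))                 # 19.2
```

Under these assumptions, a 98% fall over 168 years works out to roughly one halving every 30 years, so rule-of-thumb halving periods depend heavily on which goods and which time window are measured.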

One might object: that was true in the past, but will it continue? If we combine this trend with the timeline in "Supertrends" and all our predictions, we see that it will continue and may even accelerate, because we are focusing on and developing several extremely important revolutionary technologies.

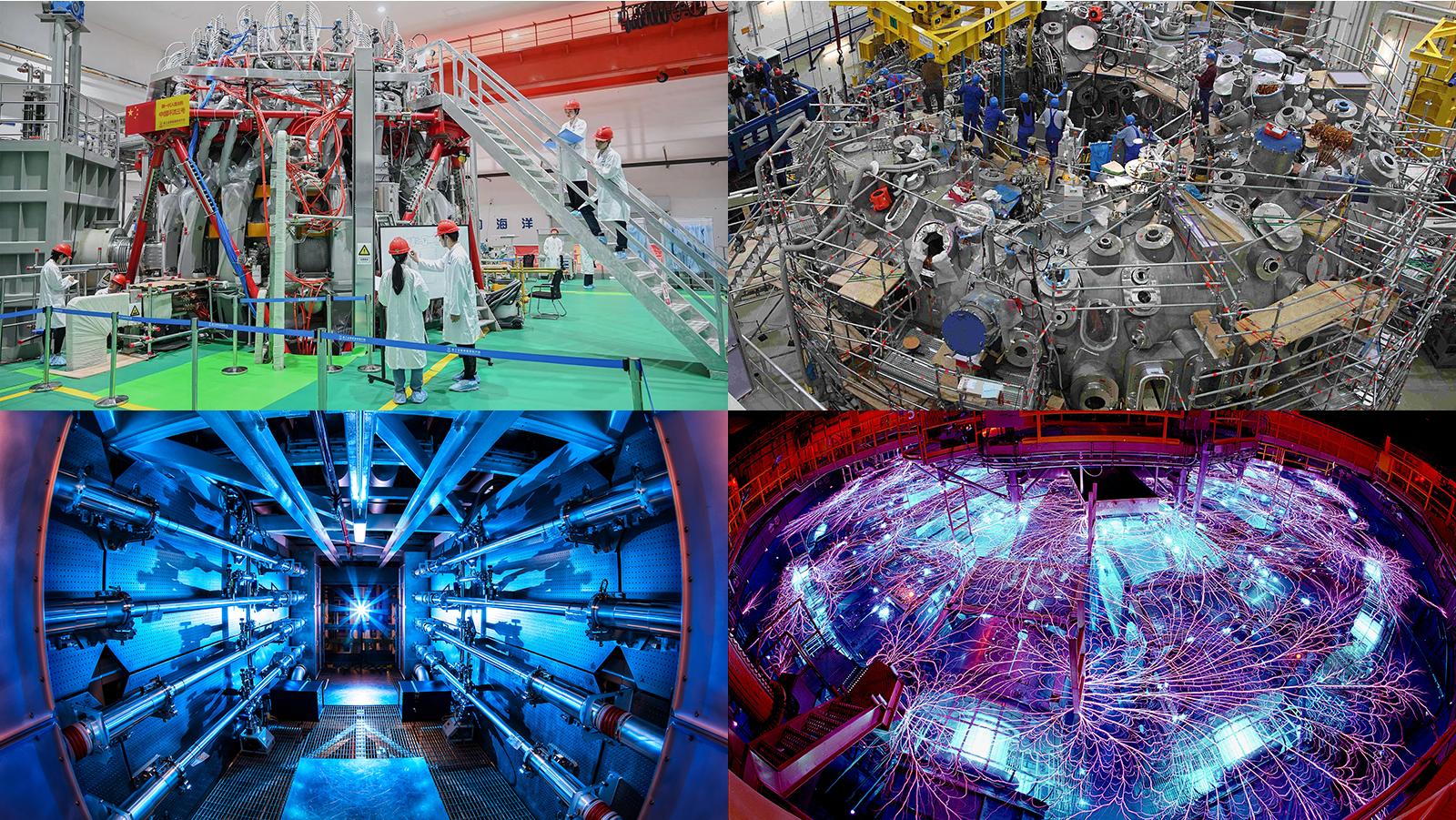

Here are a few examples. One is nuclear fusion. I recently visited an experimental controlled-fusion reactor. China is also committed to fusion research, and there are currently more than 40 experimental fusion reactors operating around the world. One company, Helion, claims it will be able to supply power to the grid within three years; we will have to wait and see. Most controlled-fusion projects, however, say it will take about 15 years to achieve commercial power supply.

Once fusion achieves commercial power supply, we will have enough energy for the entire world, enough for people to use until the Earth itself is destroyed. It is completely safe, essentially "free," requires only small amounts of extremely abundant fuel, and has no upper limit. That is an example from energy.

Four routes of controlled nuclear fusion, top left is the tokamak device (China's HL-2A); top right is the stellarator device (Wendelstein 7-X); bottom left is the laser-controlled nuclear fusion device (National Ignition Facility); bottom right is the Z-pinch device (Z Pulsed Power Facility)

But in many other fields, we also see efficiency increasing. I can give you an example that has stood the test of time. Since the birth of agriculture, people found that cultivating the same land continuously would lead to reduced yields, because plants need nitrogen to develop, and cultivation continuously extracts nitrogen from the soil.

At first, people used manure such as cow dung as fertilizer. Later, they found that manure alone could not stop yields from falling, so they began to use guano from islands in the Pacific, and that source was eventually exhausted as well. At the same time, however, people discovered that they could fix nitrogen from the air chemically (the Haber-Bosch process) and produce it industrially, and synthetic fertilizer truly solved the problem at its root.

Throughout history, this kind of thing has happened repeatedly, and we are actually heading toward "super-abundance" of materials. We are increasingly dependent on artificial synthesis rather than directly obtaining things from nature. Even labor is now being "synthesized" through artificial intelligence and robots. This is a fundamental trend that is deeply permeating and changing the way civilization operates, and it has been accelerating.

Observer.com: Through "Supertrends" and your predictive work, you are trying to depict the future of innovation. What methods do you use to distinguish genuine emerging trends from mere hype? How do you explain the unpredictability of breakthrough innovations?

Lars: We have indeed built an analytical system for distinguishing trends from hype. To tell them apart, I believe the first thing to consider is whether there is real market demand. Much hype is driven by people who understand the technology, often engineers, who believe their ideas are feasible but in many cases never ask whether there is enough demand for the technology. What truly matters is what people actually want.

From a business perspective, we also consider network effects or other amplification mechanisms. Historically, radio was initially considered a tool for "listening to books," and television was initially thought to only play recorded dramas, but later a series of application ecosystems emerged around radio and television based on demand. It turned out that humans would first invent core technologies, and then applications and demands would emerge around them. Therefore, you must simultaneously anticipate breakthroughs in core technologies and predict breakthroughs in application or demand levels.

We are currently using AI for these predictions. In fact, AI's "hallucinations" in answering "What could be done if this core technology were available in five to ten years?" have been surprisingly good. It produces a list along the lines of "this can be used for X." We then evaluate how many applications are likely to appear and how attractive they are. In my experience, AI often comes up with unexpected yet practical ideas, and these potential applications are usually quite attractive.

Observer.com: The many positive technologies you advocate will also bring significant economic disruption. In the transitional period when AI capabilities are rapidly expanding and social and economic systems have not yet adapted, how should enterprises and investors respond?

Lars: First, I believe it is beneficial to classify the impact of these technologies in the business field into two categories. The first category is improving the efficiency of existing processes, which also raises the issue of job loss. The second category is thinking about what new things these technologies can create.

Usually, the people who are good at these two kinds of tasks are not the same: people who excel at improving efficiency are generally not very creative, and vice versa. I think the approach to improving efficiency is quite straightforward: hire some people, map out all the processes, and analyze how to improve them. For the creative side, however, I don't think you should assume that you can handle it yourself within the company or simply mobilize all your employees.

I think you can build many kinds of larger structures to explore creative possibilities. You can, of course, start within the company: hold competitions and let employees submit proposals, or set up a mechanism similar to the TV show "Dragons' Den," where people pitch their ideas and get funding if the ideas are good. But you can also set up startup accelerators and corporate venture funds. For example, 10 insurance companies that compete with one another could jointly invest in an insurtech venture capital fund; banks can do something similar.

In short, there are many ways to bring good ideas to the surface.

If you invest in enough startups, you can judge based on experience: "the business logic of this company makes sense, we are willing to join its team." Once you become part of the team, your shares may also yield considerable returns. The key is to distinguish between "efficiency" and "creativity," because these are indeed two different things.

Observer.com: Do you think the development of AI will produce "superhumans" with super AI, further exacerbating global inequality?

Lars: I think it is difficult to say whether AI will increase or reduce global inequality. One possible scenario is that a small number of people become extremely wealthy due to AI. Take Elon Musk as an example: Musk's personal spending is minimal, and his wealth is mainly concentrated in his companies. Therefore, I don't think Musk is depriving others of their wealth; I think he is just circulating wealth to create jobs and promote innovation. Therefore, this is not a problem. But the more important issue is: what about ordinary people? Many people will lose their jobs due to AI and find it difficult to find a new job.

Musk

However, AI development also has positive aspects. Ordinary people can access more knowledge and receive more education through AI. The education sector must adapt to this change. You, me, and many others have been using AI to learn various content. Traditionally, we would spend several years in education and then use what we learned to earn money and advance in our careers. But this learning model has ended, and we need lifelong learning.

AI's entry into education also opens up a possibility. Suppose you were born in a poor area and have no influential connections: you can still use the same models as professors in Shanghai or Boston. This offers great opportunities to anyone with a smartphone or computer.

Observer.com: Given China's massive investments in artificial intelligence, and your emphasis on critical density as a driving force, how do you view China's position in the global development of super intelligence? What unique advantages does China have, and what challenges does it face?

Lars: First, if we look at the advantages that China possesses, I will mention a point that is often overlooked - electricity. If you have heard the statements of Google's CEO and other US tech leaders, you will recognize that in their eyes, the ultimate cost of AI is the cost of electricity. And China's advancement in power infrastructure construction is far ahead of any other country in the world.

Last year, China's investment in smart grids exceeded the total of all other countries in the world, and we indeed need a lot of electricity. As a reference, the AI factories planned to be built in the US over the next decade require more power than 100 nuclear reactors. Therefore, these AI factories will initially not mainly rely on nuclear reactors for power, but will likely primarily depend on natural gas, and later may gradually increase the proportion of nuclear energy. In contrast, electricity is China's strength, and Chinese electricity prices are lower.

The second advantage of China is relatively more relaxed data sharing rules. AI training requires data and electricity; the more comprehensive the data sharing, the faster the development of AI.

The third advantage of China, which I consider the most important, is the scale of talent. In China, there are a large number of people interested in technology and receiving good technical education. About half of the students majoring in STEM (science, technology, engineering, and mathematics) globally are in China, so China has a very high concentration of technical expertise, which is a strength.

We can more intuitively feel China's advantage in AI talent through US data. There are about 100,000 full-time AI professionals in the US, 30% of whom were born in China, which is astonishing. But more astonishingly, about half of the top AI scientists in the US were born in China.

I believe that the main commercial value of AI will not lie in large language models themselves. They are highly sensitive to their inputs, and their responses involve a considerable degree of randomness. Large models have no obvious network effects, no strong brand value, and no key patents, and they are expensive to train and run.

Therefore, I believe the commercial value of large models will be realized in the era of agentic AI, that is, when AI is deployed in specific industries. When you walk into a specific company, such as a media company or a pharmaceutical company, and say, "We want to integrate AI into this process," you need to combine, test, and adapt hundreds of different AI agents in that specific setting and enable them to keep learning on their own.

There are millions of processes in various sectors of society waiting to be deployed with AI. This extensive deployment is not a one-time effort but requires systematically sorting out enterprise processes, customers, and goals, then embedding AI systems and continuously optimizing them, which is time-consuming.

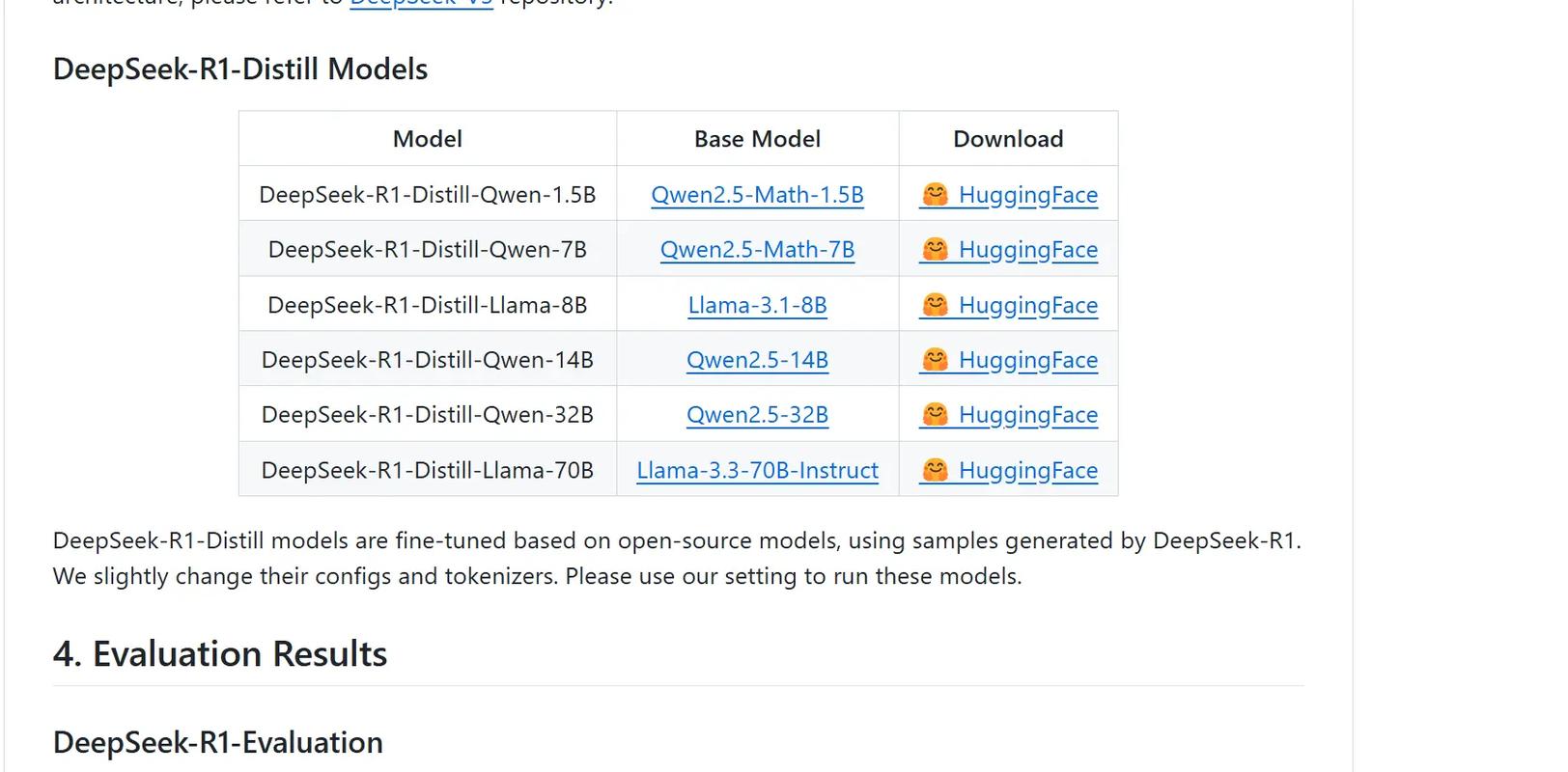

Various models that can be deployed locally after distillation

This also means that if you have 1 million people who are really good at this, your AI deployment will be fast, and your GDP will quickly rise; if you only have 10,000, your deployment process will lag, and your GDP will not quickly rise. Who has 1 million relevant talents? China. So I think this is a very big advantage.

Next, let's talk about China's shortcomings. The first shortcoming is chips. Taking NVIDIA as a benchmark, I think by 2027, China's chips will reach the level of NVIDIA today; but by then, NVIDIA will have advanced further. It's hard to say when China can catch up or even surpass it. My rough judgment is that it may take about 10 years. Currently, Chinese chips are about 40% behind NVIDIA in performance, and both are advancing exponentially, which is a big gap.

Of course, looking back at the stock-price swings triggered by DeepSeek's release, we should recognize that as software evolves, hardware may not be as "absolutely" decisive for AI as expected. Some people initially questioned whether DeepSeek had trained on "unauthorized" NVIDIA chips, which I know nothing about; DeepSeek subsequently published a paper explaining its optimization approach and made it public.

Therefore, even if China does not have the fastest, "smartest" general-purpose large language models in the future, it may not matter much. I think it is more important to deploy large models with expert depth and industry insight in specific fields and make them work in practice.

Observer.com: I want to dig deeper into large models, and my view may differ from yours. From where we stand now, the "scaling law" of large models seems to have hit a limit, and the amount of data available to large models is also approaching its ceiling. What is the future of large models? Will the current paradigm be replaced by other algorithmic approaches?

Lars: I think the basic concept of large language models may change. Currently, when you give a model a task, it processes it and then, because of memory limitations, forgets the related knowledge. With stronger memory, it could teach itself far more efficiently.

Regarding the "data depletion" issue you mentioned, which is often called AI's "data wall," I think there are solutions. The amount of data on the internet is still growing, but useful data grows by only about 5% a year, which is not much, and the amount consumed in training is gradually catching up with it.

Therefore, the key to solving the problem is to let AI simulate, that is, to use self-play. One of the most striking early examples was AlphaGo. It first trained on a vast number of historical Go game records and then, building on that experience, played against itself to improve. A year later, DeepMind introduced AlphaGo Zero, which started from scratch. At first it played like a beginner, but after 3 days it reached world-champion level, and after 40 days it had completely surpassed the previous version.

Many models now use similar self-play or simulation-based training. For example, 99.9% of Waymo's autonomous-driving training takes place in simulation, which can run 35,000 times faster than real time. Likewise, AI models can work out protein folding patterns millions of times faster than humans.

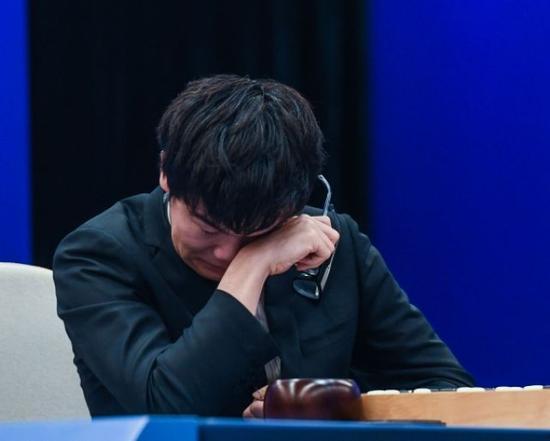

Ke Jie lost to AlphaGo in 2017

Similarly, you can model economic systems, political systems, and so on, and let AI self-play within them. In fact, AI can self-play in almost any field and generate a vast range of possibilities. This belongs to a discipline known as system dynamics, which is still in its infancy, but it means that large language models can accumulate far more knowledge than humans have ever published in any database or on the internet. I think this is very important.

In addition, embodied AI, such as robots operating in the physical world, can learn in real time. We can connect these systems and feed what they learn back into the development of AI models. If there are millions or even hundreds of millions of robots, in self-driving cars, homes, offices, and restaurants, they will not only learn how the world works in a way today's internet cannot capture, but also register changes in real time as the world keeps changing, and that information can make up for these models' shortcomings.

Therefore, I do not think the "data wall" is insurmountable; these models will capture and automatically create information. I am quite optimistic about the development of large language models.

Observer.com: But some papers have shown that training large models on AI-generated data leads to "poisoning" and amplifies biases. What do you think about this?

Lars: Yes, I understand your logic. We assume by default that AI-generated data is correct; if that data is wrong, AI will be trained on incorrect data and produce even more incorrect data, amplifying bias.

But I think this can be solved through engineering, and progress in large language models is already addressing many of these problems. For example, GPT-5 introduced a routing system across multiple models, akin to a mixture of experts (MoE): when you enter a prompt, the system decides which model the prompt should go to.

We have also seen that recent models such as DeepSeek and Claude handle hallucinations better than earlier versions. When you don't want the model to make things up, they can rein in the relevant parameters; when you want them to be imaginative, they can loosen them. For example, when I say, "Please draft a good slogan for this," the model takes a more imaginative path.

Just before our interview, I asked an AI: "Was there a period in world history when the Roman Empire and the Chinese empire together accounted for two-thirds of the world's population?" It gave the correct answer. In other words, models are getting better at telling when to stick to the facts and when to think divergently, and they perform what I call red-team-style adversarial reviews (a "red team" is a group that plays the enemy or competitor in military or cybersecurity exercises): actively asking "Is this really correct?" and then re-checking and re-verifying the answer.

Certainly, the issue you mentioned does exist, but I think AI will continue to improve in this area.

Observer.com: You believe that the development of intelligence will inevitably transcend borders, so how important is international cooperation in the development of artificial intelligence? What risks would fragmented, competitive approaches in the super-intelligence field bring?

Lars: I believe international cooperation is very useful. That said, I have some doubts about whether smooth international cooperation can be achieved, because competition between countries is very intense. Still, there have been some positive signs, such as a universal protocol for data exchange between robots. This is a good direction.

For me, the most important area for international cooperation is copyright. The reason is simple: AI is closely tied to national security, wealth, and success. People will realize that the looser the copyright controls on all kinds of knowledge, the faster their AI models will evolve; if copyright were relaxed completely, AI would evolve at the fastest possible speed.

Personally, I support relaxing copyright laws for all kinds of cultural and knowledge products. It would be very beneficial if China, the United States, the European Union, South Korea, and Japan could agree on common copyright rules. I don't know whether this will happen, but I think it would be extremely useful.

In the United States, David Sacks, co-chair of the President's Council of Advisors on Science and Technology, is leading AI policy. He is a very successful entrepreneur and venture capitalist with a deep understanding of both the business and the technology of AI. He is working to make AI laws unified and simple, and his goal is to tell everyone that these rules will not be changed for the next 10 years. I don't think that goal can be achieved, but his idea is to keep AI regulation as simple and consistent as possible.

Many people will say: "Using my books, other people's music, and other content to train AI is stealing our property." But I have used AI extensively in writing my own books. I see AI as a creative piano that everyone can "play." I think we should let AI absorb as much content as possible and use it widely without paying countless copyright fees, because it will become a platform for all of humanity, and its achievements will benefit everyone.

Since "the DeepSeek moment," more people in China have come into contact with AI

Observer.com: If artificial intelligence surpasses human cognition in every area, what role and purpose should humans take on as AI develops? How can we maintain our self-worth and autonomy in the age of superintelligence?

Lars: First, from a higher perspective: if humans really create hyperintelligence, and this hyperintelligence eventually spreads throughout the universe, even becoming "cosmic consciousness," then we are the origin of everything. Therefore, the first thing I want to say is: we should cherish our role in creating everything. But this has no direct connection with our daily lives.

Back at the everyday level, I think we should pursue values that are both uniquely human and enjoyable, such as empathy, curiosity, and creativity, so that we can continue to uphold humanity's unique value in a world where AI creates most of the GDP. Then everyone can explore what gives them worth: Am I a writer? Am I an artist? Do I like taking care of children? Do I want to help stray dogs? Do I want to restore nature that has been lost? Things like that.

We need to realize that humans have consciousness, while AI does not. We can experience the world, while AI cannot. This means that we can create new things for each other based on our subjective experiences of the world, and transform the world.

I sometimes compare our current situation to the relationship between humans and cats and dogs. When humans first began living with dogs, they were still wolves. We lived with them because they protected us, and in return we fed them. Now we don't need dogs to protect us; we simply like them. Similarly, we originally kept cats because they caught mice; mouse infestations are no longer common, yet we still like cats.

If I have 10 dogs and 10 cats at home, that will not increase my productivity; in fact, it will reduce it, because caring for cats and dogs is time-consuming and expensive. Similarly, simply increasing the population does not necessarily make technology more productive. But humans can be kind, and we can inspire and encourage one another through kindness.

Online music is almost free today: I subscribe to Spotify, Apple Music, and many other services. Even so, I really enjoy going to concerts and jazz bars and watching three musicians play right in front of me. The music may be the same, but I can see them, and because they are human, it is a completely different experience. So we must focus on real interaction between people.

So the way we live in the future will be very different, but we will also be able to provide more basic public services for free. In the future, not only education and healthcare but also basic transportation, basic internet access, and basic AI services may be free. In other words, people's basic needs will largely be met; if they want more, they will have to work for it.

This article is an Observer.com exclusive. The views expressed are solely the author's and do not represent those of the platform.

Original: https://www.toutiao.com/article/7572379565105955374/
